Live Coding Music with Machine Learning in the Browser

An ICCC’21 Tutorial

NOTE: To access the Zoom meeting for the tutorial, please check the Schedule on the Underline platform (https://underline.io/events/178/reception)

With the advent of creative AI, we need toolkits that support our artistic endeavours and expressive needs across a wide range of conditions and contexts, from co-located settings to the open, social or decentralized contexts of the Web. New live coding approaches and languages infused with machine learning let us tap into such affordances in new media and formats that support rapid, malleable and intuitive exploration.

In this tutorial, participants will engage hands-on with sema, our browser-based system for musical live coding with AI. They will be introduced to the system and its languages, and to the use of signal processing, machine learning and machine listening in the exciting domain of live coding musical performance.

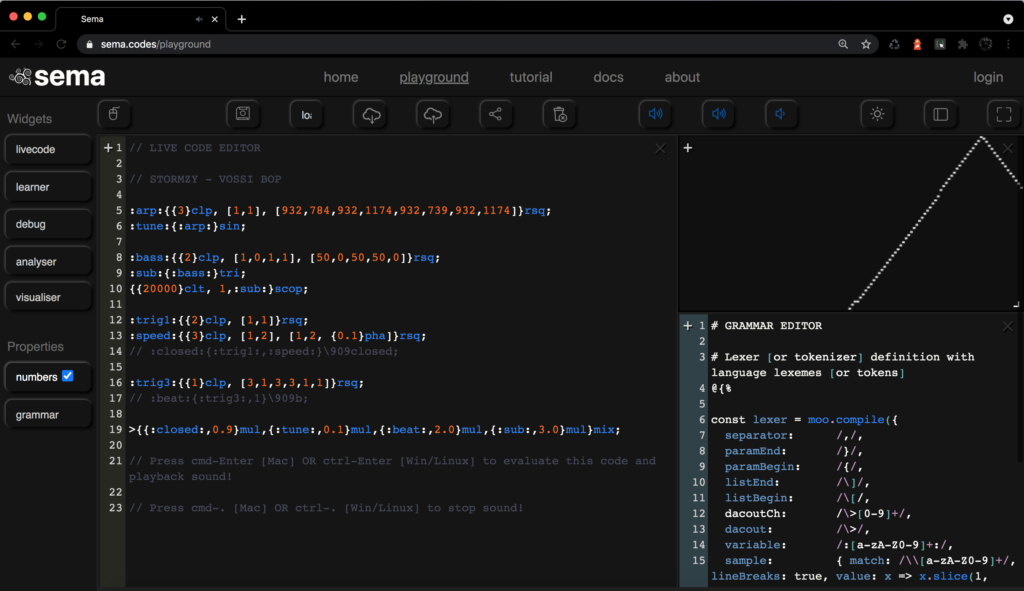

sema [1, 2, 5] (Figure 1) empowers musicians to write their own language, whether it is one piece of music, an instrument, a pattern generator, or a full-blown live coding language. We have been running both co-located and online workshops [3, 10], where participants used this new technology to perform and even design their own musical live coding languages with machine learning.

The tutorial will take place online, as part of the International Conference on Computational Creativity, on September 14-18, 2021.

Description

This tutorial will introduce the basic concepts of musical live coding with machine learning and get people up and running with sema. It will run as a 3-hour synchronous Zoom session, with no limit on the number of participants. No previous experience is required, either with sema or with music-making more generally. Nonetheless, participants will benefit from beginner-level JavaScript programming skills. Participants will need a Chrome browser installation to run sema. The tutorial will cover the following topics:

- General introduction and live coding in the browser using our sema languages [1 hour]

- Getting familiar with using machine learning as part of live coding [1 hour]

- Learning how to set up a sema instance or incorporate the sema-engine [6] into participants’ own web applications [1 hour]

Tutorial participants will learn how to express sound and music concepts using some of the languages that were created in sema, such as the default language [6], Nibble [7], and Rubber Duckling [8]. Participants will experiment with selected machine learning models and machine listening classes in JavaScript and TensorFlow.js and explore some of their applications, including FM synthesis, pattern detection and generation.
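To give a flavour of one of the applications named above, here is a minimal sketch of two-operator FM synthesis in plain JavaScript. It is not written in any of the sema languages and does not use the sema-engine API; the function name `fmSynth` and its parameters are hypothetical and chosen only for illustration. Each sample is computed as sin(2π·fc·t + I·sin(2π·fm·t)), where fc is the carrier frequency, fm the modulator frequency, and I the modulation index.

```javascript
// Hypothetical helper (not part of sema): renders two-operator FM synthesis
// into a Float32Array of samples in the range [-1, 1].
function fmSynth({ carrierHz, modulatorHz, index, sampleRate, durationSec }) {
  const n = Math.floor(sampleRate * durationSec);
  const out = new Float32Array(n);
  for (let i = 0; i < n; i++) {
    const t = i / sampleRate; // time of this sample in seconds
    // The modulator is a sine wave that shifts the phase of the carrier.
    const modulator = Math.sin(2 * Math.PI * modulatorHz * t);
    out[i] = Math.sin(2 * Math.PI * carrierHz * t + index * modulator);
  }
  return out;
}

// Half a second of a 440 Hz carrier modulated at 110 Hz.
const samples = fmSynth({
  carrierHz: 440,
  modulatorHz: 110,
  index: 2,
  sampleRate: 44100,
  durationSec: 0.5,
});
```

Raising `index` adds more sidebands and makes the timbre brighter; setting it to 0 leaves a plain sine wave at the carrier frequency. In a browser, the resulting buffer could be copied into a Web Audio `AudioBuffer` for playback.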

As a result of this tutorial, participants will gain an understanding of workflows in sema and explore practical techniques for performing with the live coding mini-languages, including live coding with interactive machine learning and pre-trained generative models. We believe participants will gain a better understanding of what signal processing, machine learning and machine listening are in the context of live coding language design and performance.

We hope that tutorial participants will become members of the community that we are building around sema. The tutorial will also include a brief discussion of participants’ experience in learning and using the sema system, which will help us further refine it.

Organizers

- Organizer 1 Francisco Bernardo (University College London, UK)

Francisco Bernardo is a computer scientist and a multi-instrumentalist. His research focuses on human-computer interaction approaches to toolkits that broaden and accelerate user innovation with interactive machine learning. Francisco has worked on applied research projects at the intersection of art and innovation, software engineering, interaction design and greenfield product management.

- Organizer 2 Chris Kiefer (University of Sussex, UK)

Chris Kiefer is a computer-musician and musical instrument designer, specialising in musician-computer interaction, physical computing, and machine learning. He performs with custom-made instruments including malleable interfaces, touch screen software, interactive sculptures and a modified self-resonating cello. Chris is an experienced live coder, performing under the name ‘Luuma’. He performs with Feedback Cell and Brain Dead Ensemble, and has released music with ChordPunch, Confront Recordings and Emute.

- Organizer 3 Thor Magnusson (University of Sussex, UK)

Thor Magnusson is a worker in rhythm, frequencies and intensities. His research interests include musical improvisation, new technologies for musical expression, live coding, musical notation and digital scores, artificial intelligence and computational creativity, programming education, and the philosophy of technology. These topics have come together in the ixiQuarks, ixi lang, and the Threnoscope live coding systems he has developed.

To contact the organizers, please send an email to f.bernardo@ucl.ac.uk.